AI Glasses: Opening up a New Horizon of Smart Life

Tuesday, September 16, 2025

I. Technical Architecture and Core Components of AI Glasses

(A) Hardware Architecture: End-to-End Design from Sensing to Display

The hardware system of AI glasses must strike a balance between portability and functionality, with its core composed of five major modules.

- Sensing Unit: It includes high-definition cameras (8-48 megapixels, shooting at 30-60 frames per second), infrared depth sensors (such as the ToF technology used in Microsoft Azure Kinect), ambient light sensors, and Inertial Measurement Units (IMUs). These components work together to capture 3D spatial information and dynamic gestures. For example, the binocular stereo vision system of Huawei smart glasses can achieve a gesture recognition accuracy of ±3° with a false-trigger rate of less than 0.5 per hour. Through these sensors, AI glasses perceive the user's environment and actions in real time, providing the data foundation for subsequent intelligent interactions.

- Display Module: Mainstream technologies such as micro-OLED or LCoS are used, with a resolution of 1920×1080 or higher, a Field of View (FOV) of 30°-120° (industrial-grade products like Vuzix Shield have an FOV of 40°), and a brightness of ≥500 nits to suit outdoor environments. Waveguide technology keeps the lens thickness within 3 mm and reduces the weight to 80-150 grams, approaching the wearing experience of traditional glasses. The display module is the "window" of AI glasses, presenting processed information to users clearly and intuitively. High resolution and an appropriate FOV ensure a good visual experience, while the lightweight design keeps the glasses comfortable to wear.

- Processing Core: Equipped with a dedicated AI chip (such as Qualcomm XR1 or Huawei Ascend 310B), it delivers about 10 TOPS (trillion operations per second) of computing power. Lightweight models for object detection and semantic segmentation can run locally, with latency kept within 20 ms to avoid dizziness. The processing core is the "brain" of AI glasses: it processes and analyzes sensor data quickly enough for the intelligent functions to run smoothly in real time. Low-latency processing is crucial for the user experience, because delays make operation sluggish and can cause dizziness.

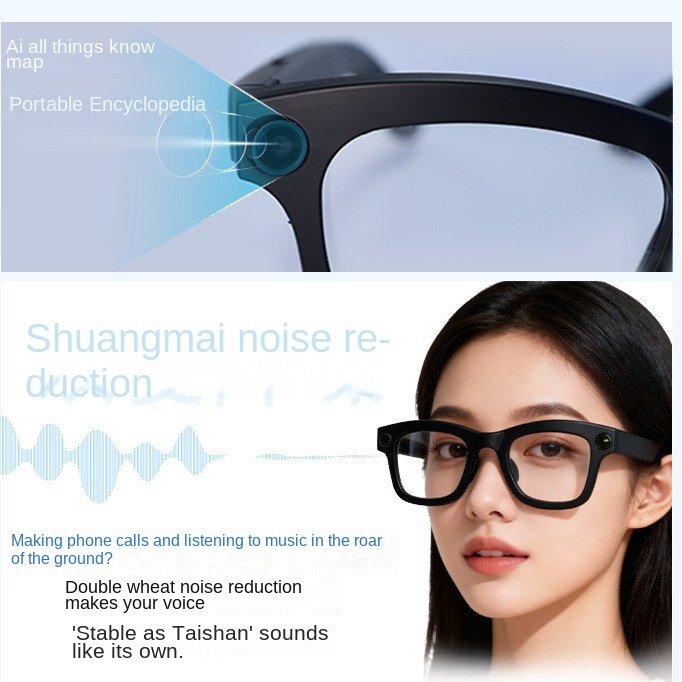

- Interaction Interface: Integrating bone-conduction headphones (with a call clarity of over 95%), touchpads, voice microphone arrays (supporting far-field voice pickup within 5 meters), and eye tracking (with an accuracy of 0.5° and a sampling rate of 120 Hz), it enables multi-modal command input. The interaction interface is the communication bridge between users and AI glasses. With several interaction methods available, users can choose the most convenient one for the scenario and their own needs. For example, during sports, users can operate the glasses through voice commands; when the hands are busy, eye tracking can take over.

- Battery Life and Communication: The glasses carry a 1000-2000 mAh battery, providing 3-8 hours of use per charge (depending on the usage scenario), and support Type-C fast charging and wireless charging. They are also equipped with Wi-Fi 6, Bluetooth 5.2, and optional 4G/5G modules to ensure real-time data exchange with the cloud. Battery life and communication are what allow AI glasses to work continuously and obtain external information. Although battery life still needs to improve, fast charging and wireless charging ease users' battery anxiety to some extent. The communication module lets AI glasses interact with cloud servers in real time to obtain the latest information and services.
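
As a rough illustration of how battery capacity relates to runtime, the following sketch converts a capacity in mAh into watt-hours and divides by an average power draw. The 3.7 V nominal cell voltage, the 90% usable fraction, and the sample power figures are assumptions chosen for illustration; they are not specifications of any product mentioned here.

```python
def estimated_runtime_hours(capacity_mah: float,
                            avg_power_w: float,
                            nominal_voltage_v: float = 3.7,
                            usable_fraction: float = 0.9) -> float:
    """Estimate runtime in hours from battery capacity and average power draw.

    nominal_voltage_v and usable_fraction are illustrative assumptions.
    """
    if avg_power_w <= 0:
        raise ValueError("avg_power_w must be positive")
    # mAh -> Ah, then Ah * V = Wh; scale by the fraction that is usable.
    energy_wh = capacity_mah / 1000.0 * nominal_voltage_v * usable_fraction
    return energy_wh / avg_power_w


if __name__ == "__main__":
    # Hypothetical average power draws for light, typical, and heavy use.
    for capacity in (1000, 2000):
        for power in (0.8, 1.2, 2.0):
            hours = estimated_runtime_hours(capacity, power)
            print(f"{capacity} mAh at {power} W: about {hours:.1f} h")
```

Real devices deviate from this simple model because power draw varies with display brightness, radio activity, and workload.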

(B) Software System: AI-Driven Scene Understanding Engine

The software layer is the "intelligent hub" of AI glasses, with its core capabilities reflected in the following aspects.

- Real-Time Scene Analysis: Based on lightweight deep-learning models (such as MobileNet-SSD and YOLOv8n), it performs object detection (supporting over 1000 types of objects), text recognition (with an OCR accuracy of 98%), and semantic segmentation (pixel-level scene classification) locally at 30 frames per second. For example, industrial-grade AI glasses can identify the position of the pointer on a device dashboard within 0.5 seconds and determine whether it exceeds the threshold. Through real-time scene analysis, AI glasses understand the user's environment and identify the objects, text, and other information in the field of view, so they can provide more targeted services. In a shopping scenario, they can quickly identify products and show relevant information; when the user reads foreign-language material, they can recognize and translate the text in real time.

- Multi-Modal Interaction Algorithms: By fusing voice, gesture, and eye-movement data through an attention mechanism, the glasses infer user intent. For example, "look at the button + pinch gesture" triggers a confirmation operation, with an error rate of less than 1%. Voice command recognition supports custom industrial terms and still reaches an accuracy of 90% in an 85-decibel noisy environment. Because each interaction method has its own strengths in different scenarios, combining them gives users a more natural and fluid interaction experience. In a noisy factory, for instance, voice commands may suffer interference, and gesture and eye-movement interaction can take over.

- AR Space Anchoring: Using SLAM (Simultaneous Localization and Mapping) technology to build a 3D map of the environment, virtual information (such as repair steps and navigation arrows) can be stably overlaid on the physical space, with a position drift of ≤2 cm/hour, ensuring long-term interaction stability. AR space anchoring is the key to an augmented reality experience: with a map of the environment, AI glasses can place virtual information at the right position in the real-world scene and keep it there. This has wide application in industrial maintenance, intelligent navigation, and other fields. For example, maintenance personnel can see virtual repair steps on the equipment in front of them, and navigation arrows can be overlaid precisely on real roads.

- Cloud Collaboration Framework: Adopting a hybrid architecture of "edge computing + cloud inference", tasks with strict real-time requirements (such as gesture recognition) are processed locally, and complex tasks (such as rare fault diagnosis) call cloud-based large models (such as GPT-4V or Tongyi Qianwen Multimodal Edition), with a response latency kept within 500 ms. The framework draws on the strengths of both sides: local edge computing handles simple, time-critical tasks quickly, while powerful cloud-based large models give more accurate results for complex tasks. This hybrid architecture preserves response speed while improving the intelligent processing capabilities of AI glasses.
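
The split between edge and cloud processing described above can be sketched as a simple routing policy. This is a minimal illustration, not the scheduling logic of any actual product: the `Task` fields, the `route` function, and the decision rule are all hypothetical, and only the 20 ms and 500 ms figures come from the text.

```python
from dataclasses import dataclass

LOCAL_BUDGET_MS = 20    # on-device latency target quoted in the text
CLOUD_BUDGET_MS = 500   # cloud response target quoted in the text


@dataclass
class Task:
    name: str
    deadline_ms: int          # how quickly the user needs a result
    needs_large_model: bool   # True if a lightweight local model is not enough


def route(task: Task, network_up: bool = True) -> str:
    """Return 'edge' or 'cloud' for a task (hypothetical policy)."""
    # A deadline tighter than the cloud round trip forces local processing.
    if task.deadline_ms < CLOUD_BUDGET_MS:
        return "edge"
    # Complex tasks go to the cloud when a connection is available.
    if task.needs_large_model and network_up:
        return "cloud"
    # Everything else, including the offline fallback, stays on the device.
    return "edge"


if __name__ == "__main__":
    tasks = [
        Task("gesture_recognition", LOCAL_BUDGET_MS, False),
        Task("rare_fault_diagnosis", 2000, True),
    ]
    for task in tasks:
        print(task.name, "->", route(task))
```

A production scheduler would likely also weigh battery level, bandwidth, and data sensitivity; this sketch only captures the latency-driven split.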

II. Core Functions and Technological Breakthroughs of AI Glasses

(A) Core Function Matrix

- Augmented Reality Information Overlay: Dynamically display digital content related to the scene in the user's field of view.

  - Navigation Scenario: Overlay arrow indicators and distance information, with turn prompts popping up 30 meters in advance, which is 40% more efficient than mobile phone navigation. For example, when the user walks in an unfamiliar city, AI glasses display navigation arrows directly in the field of view, indicating the turning direction and distance. Users do not need to look down at their phones, which makes navigation both more convenient and safer.

  - Industrial Scenario: Mark component names, parameters, and status on equipment, and display step-by-step animations during maintenance, reducing novice operation error rates by 60%. In industrial manufacturing and maintenance, workers wearing AI glasses can see the relevant information for each component directly on the equipment, and animated demonstrations walk them through each maintenance step, which improves work efficiency as well.

- Intelligent Visual Analysis: Automatically analyze what is in view and provide decision-making support.

  - Text Processing: Real-time translation of foreign-language signs (supporting over 50 languages, with an accuracy of 95%), recognition of QR codes and barcodes, and information parsing. In cross-border travel or business communication, real-time translation helps users understand foreign-language signs and text. Fast and accurate QR code and barcode recognition is likewise convenient in shopping, logistics, and other scenarios.

  - Defect Detection: In photovoltaic panel inspection, it can automatically identify micro-cracks and cold solder joints with an accuracy of 99.2%, far exceeding manual visual inspection. Product quality inspection is crucial in industrial production. With intelligent visual analysis, AI glasses detect product defects quickly and accurately, improving production quality and efficiency and reducing the errors and costs of manual inspection.

- Hands-Free Interaction: Operate the glasses without touching a device.

  - Gesture Control: Complete interface operations through more than 10 gestures such as pinching, swiping, and pointing; gestures are still recognized when gloves are worn in industrial scenarios. Where touch operation is inconvenient, such as when workers wear protective gloves or during sports, simple gestures let users operate AI glasses and reach their functions with far less effort.

  - Eye-Movement Interaction: Automatically select a menu option when the user looks at it for 2 seconds, which suits scenarios such as surgery and assembly where the hands are occupied. In surgery and precision assembly, which demand high-precision hand work, doctors and assembly workers can select menu options or complete some operations by gaze alone, avoiding the contamination or errors that manual operation might cause.

- Remote Collaboration Support: Provide remote guidance through first-person live streaming and AR annotation.

  - Remote experts can watch the on-site video feed in real time and mark key points with virtual arrows or circles, with a synchronization rate of 98% between the annotation and the physical object. In industrial maintenance, technical guidance, and similar scenarios, on-site personnel who run into problems can stream their first-person view to remote experts through AI glasses. The experts see the on-site situation and point out the key problems through virtual annotations, which greatly speeds up problem solving.

  - Voice calls and on-screen annotations are synchronized, and communication is 50% more efficient than with traditional video conferencing. Unlike traditional video conferencing, the remote collaboration function transmits the live view while keeping voice and annotations in sync, so both parties can communicate more intuitively and accurately.
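
The dwell-based selection described under Eye-Movement Interaction (select a menu option after looking at it for 2 seconds) can be sketched as follows. This is a minimal illustration under assumptions: the `dwell_select` function and the sample format are hypothetical, and a real eye tracker would additionally need fixation filtering and tolerance for brief glances away.

```python
from typing import Iterable, Optional, Tuple

DWELL_SECONDS = 2.0  # dwell threshold quoted in the text


def dwell_select(samples: Iterable[Tuple[float, Optional[str]]],
                 dwell_s: float = DWELL_SECONDS) -> Optional[str]:
    """Return the first target looked at continuously for dwell_s seconds.

    samples: (timestamp_seconds, target_id or None) pairs in time order,
    where None means the gaze is not on any menu option.
    """
    current: Optional[str] = None
    start = 0.0
    for t, target in samples:
        if target != current:
            # Gaze moved to a different target (or off the menu): restart timer.
            current, start = target, t
        elif current is not None and t - start >= dwell_s:
            return current
    return None


if __name__ == "__main__":
    gaze = [(0.0, "ok"), (1.0, "ok"), (1.5, "cancel"),
            (2.0, "cancel"), (3.0, "cancel"), (3.5, "cancel")]
    print(dwell_select(gaze))
```

In the sample data the gaze leaves "ok" before the threshold is reached, so only the later, uninterrupted look at "cancel" counts toward a selection.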

(B) Key Technological Breakthroughs

- Low-Power High-Performance Computing: Using a heterogeneous computing architecture (CPU + NPU + GPU), the power consumption of tasks such as object detection has been reduced from 5 W to 1.2 W, supporting 8 hours of continuous operation. Reducing power consumption without sacrificing functionality has long been a focus of research and development. By allocating each computing task to the unit best suited to it, a heterogeneous architecture lowers power consumption and extends battery life, so users can wear AI glasses for longer.

- Adaptation to Low-Light Environments: By combining infrared imaging with visible-light enhancement algorithms, objects can still be clearly recognized in a low-light environment of 0.1 lux, meeting the needs of night inspections. In scenarios such as night-time industrial inspections and outdoor adventures, low light makes visual recognition difficult, and this combination gives users reliable visual support.

- Lightweight Optical Solutions: Using a combination of diffractive waveguides and micro-displays, the thickness of the optical module has been reduced from 10 mm to 3 mm, and the weight has been reduced by 60%, significantly improving wearing comfort. Lightweight optics are crucial to bringing AI glasses closer to the wearing experience of traditional glasses: a thinner, lighter optical module makes the glasses more comfortable and reduces the burden on users during long-term wear.

- Privacy Protection Mechanisms: Sensitive data (such as medical images) is stored locally, and models are trained with federated learning to avoid uploading raw data, in line with regulations and standards such as GDPR and ISO 27001. As attention to data security and privacy grows, privacy protection is built into the technology itself. Keeping sensitive data on the device and training models through techniques such as federated learning means raw data never has to be uploaded, which helps keep user data secure and compliant with the relevant requirements.
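
To make the federated learning idea concrete, the sketch below shows the aggregation step of federated averaging (FedAvg), a commonly used federated learning algorithm: each device trains locally and sends only model weights, which a server combines in proportion to each device's sample count. The text does not say which algorithm is used, so FedAvg is an assumption here, and the function name and flat weight-list format are hypothetical.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Combine client model weights, weighting each by its sample count.

    Only weights are exchanged; the raw training data stays on each device.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all clients must report the same number of weights")
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


if __name__ == "__main__":
    # Two hypothetical devices with 1 and 3 local training samples.
    print(federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3]))
```

Sharing weights instead of data reduces exposure but does not by itself guarantee privacy; deployments often add measures such as secure aggregation.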

III. Industry Implementation Cases of AI Glasses

(A) Industrial Manufacturing: Intelligent Inspection and Remote Operation and Maintenance

- Sany Heavy Industry Smart Factory: The factory deployed Vuzix Shield AI glasses. The glasses automatically identify equipment models and retrieve 3D assembly drawings and operation manuals, while AR animations demonstrate the bolt-tightening sequence and torque requirements step by step. For complex faults, workers connect with remote experts through first-person live streaming, and the experts mark the fault points and repair steps. This raised the first-time repair rate from 65% to 92% and shortened the average repair time from 45 minutes to 18 minutes. Workers obtain equipment information and operating guidance quickly, and timely support from remote experts on complex problems reduces fault-related downtime and improves production efficiency.

- State Grid Substation Inspection: Customized AI glasses integrate an infrared thermal imaging module. During inspections, they automatically scan equipment (such as transformers and circuit breakers), analyze temperature distribution in real time (raising an alarm automatically when the temperature difference exceeds 5 °C), read instrument values, and compare them with standard values to generate an electronic inspection report. Compared with traditional manual inspections, efficiency has tripled, the missed-inspection rate has dropped from 8% to 0.5%, and more than 12 power outage incidents are avoided each year. With thermal imaging and intelligent analysis, potential equipment problems are detected early enough for warning and treatment, helping keep the power grid safe and stable, while labor costs fall as inspections become faster.
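
The temperature alarm in the substation case can be sketched as a simple threshold check. The text does not say what the temperature difference is measured against, so this sketch assumes a per-inspection reference temperature; the function name, component names, and readings are hypothetical, and only the 5 °C threshold comes from the text.

```python
from typing import Dict, List

TEMP_DIFF_ALARM_C = 5.0  # alarm threshold quoted in the text


def thermal_alarms(readings_c: Dict[str, float],
                   reference_c: float,
                   threshold_c: float = TEMP_DIFF_ALARM_C) -> List[str]:
    """Return the components whose temperature deviates from the reference
    by more than threshold_c, sorted by name.

    Comparing against a single reference temperature is an assumption.
    """
    return sorted(
        name for name, temp in readings_c.items()
        if abs(temp - reference_c) > threshold_c
    )


if __name__ == "__main__":
    # Hypothetical readings in degrees Celsius.
    readings = {"transformer_A": 62.0, "breaker_B": 55.5, "breaker_C": 48.0}
    print(thermal_alarms(readings, reference_c=50.0))
```

A deployed system would more likely compare like-for-like components or phases and account for load and ambient temperature before raising an alarm.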

(B) Healthcare: Precise Assistance and Remote Diagnosis and Treatment

- Neurosurgery at Beijing Tiantan Hospital: Doctors wear a customized version of Microsoft HoloLens 2. Before surgery, the patient's CT/MRI image data is imported into the system. During surgery, AR technology precisely registers the virtual 3D brain model to the patient's actual brain and displays key information such as tumor location and blood vessel distribution in real time, helping doctors remove tumors more accurately. The average surgery time is shortened by 20%, and the tumor resection rate is increased by 15%. Precision is crucial in neurosurgery, and this real-time visual assistance helps doctors understand the patient's condition, plan the procedure, and improve surgical success rates and treatment outcomes.

- Remote Medical Consultation: Doctors in primary hospitals wear AI glasses to transmit patient conditions, examination data, and other information in real time to senior experts at higher-level hospitals, who guide and diagnose remotely through the first-person view of the glasses. In remote areas, AI glasses overcome geographical limitations so that patients receive expert diagnosis and treatment advice promptly, improving both the use of medical resources and the level of medical service. This makes remote consultation an effective response to the uneven distribution of medical resources: experts can guide primary-level doctors across distances, and patients can receive high-level medical care locally.

(C) Consumer-Level Applications: Smart Life and Assisted Mobility

- Intelligent Navigation and Travel Guidance: When users travel, AI glasses provide real-time navigation information, displaying route guidance directly in the user's field of view. At tourist attractions, they automatically identify the sights and provide detailed introductions along with historical and cultural information, making travel more convenient and richer. In daily life, the intelligent navigation function of AI glasses makes getting around easier. In the travel scenario, the guidance function acts like a personal tour guide, providing users with rich attraction information, increasing the